Are Today's Websites Ready for AI Agent Traffic?

We ran repeated simulations with ChatGPT Agent Mode to identify what's blocking agent discovery and conversion ✋🏼

2025 has been the year of the AI agent, and companies like OpenAI are investing heavily in both their own agentic products and the ecosystem at large. Just yesterday they announced an Agent Builder UI and a slew of tooling to build and deploy agentic workflows and apps.

Consumers are already offloading product discovery and research tasks to AI agents that browse the open web, but most business websites today are poorly positioned to be discovered effectively.

The test

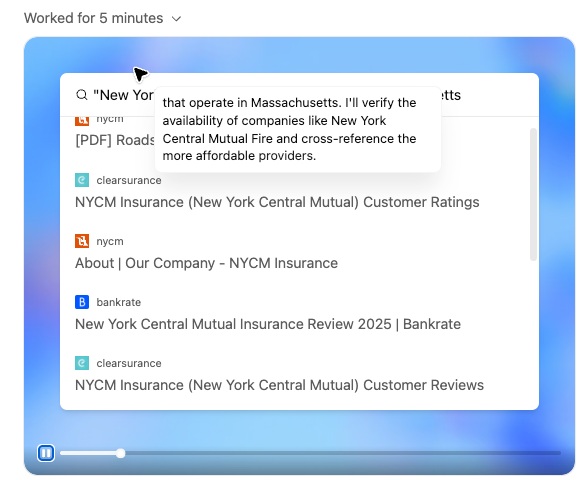

As an experiment, we repeatedly prompted ChatGPT's Agent Mode to find customized car insurance quotes. It provided useful results after scouring the web and visiting 50+ sites each time, but what surprised us was how often it got stuck and abandoned websites that were built for humans, not robots. Each simulation ran into an average of 5.6 issues that caused the agent to abandon its current visit and try another site. Here are the top issue categories:

| Problem | Prevalence | Examples |
|---|---|---|
| Access issues | 25% | Page blocked, restricted (403), or inaccessible due to permissions |
| Content too long | 21% | Article or site too long to parse |
| Dynamic content | 21% | Data hidden behind interactive or JavaScript-rendered elements |
| Location issues | 11% | Blocked due to geographic restrictions (e.g. IP-based filtering) |
| Design limitations | 11% | Layout or structure prevented reliable data extraction |
| Other | | Slow loading, network issues, etc. |

Technical observations

It was interesting to see the agent switch between making direct network requests (likely via the ChatGPT-User bot) and spinning up a browser to act more human-like by analyzing screenshots, clicking elements, and filling in forms.

While everything was in Agent Mode, it makes sense that ChatGPT opts to make some searches/requests directly through its bots rather than use the browser, which can be slow and expensive. However, when not in browser mode, the agent is much less capable—it's well documented that most AI bots/crawlers do not run JavaScript, hence the issues with dynamic content.
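To get a rough sense of what a non-JavaScript client sees, you can check whether your key content appears in the raw HTML at all. The sketch below is only an approximation of that idea, not how ChatGPT's bots actually parse pages: it uses Python's standard `html.parser`, ignores `<script>` and `<style>` contents, and reports which phrases are missing. The helper names (`visible_text`, `missing_phrases`) and the sample pages are made up for illustration.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text from raw HTML, ignoring <script> and <style> contents."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that sits outside skipped tags.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(raw_html: str) -> str:
    """Return the text present in the HTML source without running any JavaScript."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    return " ".join(parser.chunks)


def missing_phrases(raw_html: str, required_phrases: list[str]) -> list[str]:
    """List the required phrases that do not appear in the static text."""
    text = visible_text(raw_html).lower()
    return [phrase for phrase in required_phrases if phrase.lower() not in text]


# Hypothetical pages: one server-rendered, one that fills in content with JavaScript.
static_page = "<html><body><h1>Car insurance</h1><p>Quotes from $42/month</p></body></html>"
dynamic_page = (
    "<html><body><div id='root'></div>"
    "<script>render('Quotes from $42/month')</script></body></html>"
)

print(missing_phrases(static_page, ["Quotes from"]))
print(missing_phrases(dynamic_page, ["Quotes from"]))
```

If the second kind of result shows up for your own pages, the pricing or product details probably only exist after client-side rendering, which is the "dynamic content" failure from the table above.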

What does this mean for businesses?

While many sites were parsed successfully, the high rate of issues and abandoned visits means businesses are leaving potential customers and revenue on the table, and that gap will grow as agents become more popular.

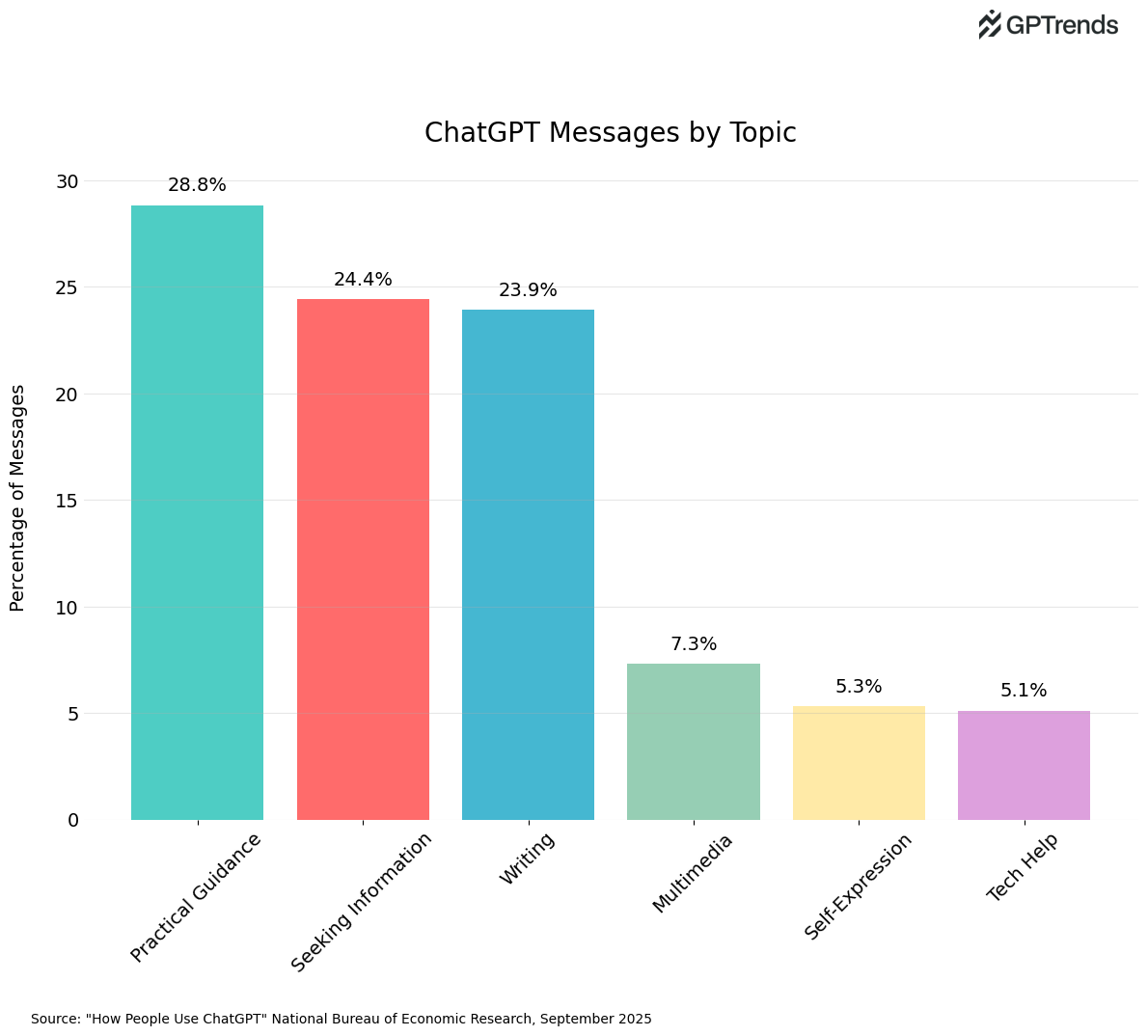

According to Cloudflare, ~29% of web traffic is already non-human (bots, crawlers, agents, etc.). So what are the implications for websites and discovery if people employ agents rather than visiting directly?

- Implement accessible and efficient ways to communicate product offering and value: structured data, agent verification mechanisms, open APIs, etc.

- Rethink analytics: if direct human traffic is replaced by agents, how do you assess the quality and success of a visit? New monitoring methods and metrics (e.g., agent sessions and conversion funnels) will likely be necessary. Today's analytics tools like Google Analytics, Amplitude, etc. aren't well positioned to measure this.
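As a rough illustration of both points, here is a minimal Python sketch, not a production setup. The first function labels a request by looking for the user-agent tokens OpenAI publishes for its bots (`ChatGPT-User`, `OAI-SearchBot`, `GPTBot`); the label strings are our own, and browser-driven agent visits may present an ordinary browser user agent, so this would not catch them. The second builds a schema.org `Product` with one `Offer` as JSON-LD; the function name and the sample values are hypothetical.

```python
import json

# User-agent tokens OpenAI documents for its bots.
# The labels on the right are made-up names for this sketch.
OPENAI_AGENT_TOKENS = {
    "ChatGPT-User": "user-triggered fetch",
    "OAI-SearchBot": "search crawler",
    "GPTBot": "training crawler",
}


def classify_visit(user_agent: str) -> str:
    """Return a coarse traffic label for one request's User-Agent header."""
    lowered = user_agent.lower()
    for token, label in OPENAI_AGENT_TOKENS.items():
        if token.lower() in lowered:
            return label
    # "other" covers humans and every bot this sketch does not know about.
    return "other"


def product_json_ld(name: str, description: str, price: str, currency: str) -> str:
    """Serialize a schema.org Product with a single Offer as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical usage with invented values.
print(classify_visit("Mozilla/5.0; compatible; ChatGPT-User/1.0; +https://openai.com/bot"))
print(product_json_ld("Example Auto Policy", "Sample coverage plan", "42.00", "USD"))
```

The JSON-LD string would typically be embedded in the page inside a `<script type="application/ld+json">` tag, so it is available in the raw HTML even to clients that never execute JavaScript. The visit labels could be logged alongside each request as a starting point for separating agent sessions from human ones.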

Duane Forrester put it well in a recent post:

> Building and maintaining a site will increasingly mean structuring it for agents to retrieve from, not just for people to browse.

The web was built for human browsers. That assumption still holds. But the most valuable traffic may increasingly come from elsewhere.

PS: At GPTrends, we're building Agent Analytics to help companies identify and resolve these issues, boost discovery in AI chat apps, and ensure content efforts don't go to waste.
