The AI Crawler Optimization Guide to Increase Site Visibility

GEO Tech

This blog is a watered-down version of our technical Notion guide on AI Crawlers. Into Technical SEO? We suggest you read the full guide instead of this blog!

Everyone’s rushing to add an llms.txt file to their site because they saw it on LinkedIn. Let’s be honest, that one move won’t magically improve your brand's visibility in AI search.

From our research and extensive testing with our customers, it’s clear that AI crawlers operate differently from traditional SEO crawlers. While Google can execute JavaScript, build the DOM, and index dynamic content, most AI bots still read your raw HTML and stop there. No scripts, no dynamic loading, no fancy front-end trickery.

The key is to adapt your setup to the way AI crawlers work.

In this blog, we’ll quickly cover six technical factors that can make or break your brand’s visibility in AI search. Implementing these improvements usually requires a technical SEO specialist or website admin.

For those who want the full technical deep dive, we’ve prepared a detailed Notion guide; you can find it here. Not technical? We suggest you read this blog first, then share the guide with your team to get them started!

1. Is your website AI search–friendly? 🤖

Before you think about optimizing for AI search, you need to know if your tech stack is AI crawler friendly. Unlike Google, most AI bots don't run JavaScript and only read the initial HTML.

Examples of friendly stacks are:

- WordPress – old but gold; PHP-powered frameworks are generally good to go!

- Next.js – as long as Server-Side Rendering (SSR) or Static Site Generation (SSG) is enabled.

- Statically generated content – plain HTML, or Hugo, Gatsby, Astro, Jekyll, etc. for when you want to keep it simple.

- Site builders - Framer, Webflow, Wix, Builder.io, and the other ten thousand options.

More importantly, let's look at the unfriendly stacks:

- Standalone React with CSR (Client-side rendering) – Legacy Create React App (CRA), Vite + React, or standalone React Router.

- Standalone Vue with CSR – Vue CLI, Vite + Vue, or standalone Vue Router.

- Angular and other frameworks with CSR – Angular CLI, or Svelte without SvelteKit.

Working with an unfriendly stack? Ask your developer to migrate at least the homepage to SSR or make it static.
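A quick way to sanity-check a page is to look at its raw HTML the way a non-JavaScript crawler would. The sketch below is only an illustration: `visible_text` and `is_visible_without_js` are hypothetical helper names, and the check assumes a crawler ignores anything inside `<script>`, `<style>`, and `<template>` tags.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects the text present in raw HTML, skipping script-like tags."""

    # Assumption: a non-JavaScript crawler ignores the content of these tags.
    SKIPPED_TAGS = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside skipped tags.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(raw_html: str) -> str:
    """Return the text that exists in the HTML before any script runs."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    return " ".join(parser.chunks)


def is_visible_without_js(raw_html: str, key_phrase: str) -> bool:
    """True if the phrase is already present in the initial HTML."""
    return key_phrase.lower() in visible_text(raw_html).lower()


# A client-side rendered shell: the phrase only exists inside a script.
csr_html = '<body><div id="root"></div><script>render("Pricing plans")</script></body>'
# A server-rendered page: the phrase is in the markup itself.
ssr_html = '<body><h1>Pricing plans</h1><script src="app.js"></script></body>'

print(is_visible_without_js(csr_html, "Pricing plans"))  # False
print(is_visible_without_js(ssr_html, "Pricing plans"))  # True
```

To test a real page, feed the function the response body of a plain HTTP request (no headless browser) and search for a phrase you expect on that page.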

Not sure what stack you’re running?

This tool will tell you in seconds. Got questions about your stack or crawler visibility? Contact us at [email protected].

2. Internal linking: clear robot pathways 🔗

Your site needs clear navigation for both humans and robots alike. Smart internal linking connects your key pages, making them easy to discover from multiple paths. Your goal: connect your most valuable content through several routes so it’s never hidden.

Step 1: Build topic clusters

Think in terms of topic clusters: one broad “pillar” page with related subpages diving into specific topics, often referred to as “breadcrumb paths”. For example:

Topic Cluster: "Digital Marketing"
├── Pillar Page: Complete Guide to Digital Marketing
├── SEO Fundamentals
├── Content Marketing Strategies
├── Social Media Marketing
└── Email Marketing Best Practices
    ├── Newsletter Design Tips
    ├── Automation Workflows
    └── A/B Testing for Emails

Step 2: Connect your key pages

Make sure pivotal posts have multiple entry points. Breadcrumbs, “Related” sections, or footer links work wonders.

Step 3: Highlight What Matters Most

Focus on your highest-value content. The more paths that lead to important pages, the more chance crawlers will find them. Try not to overdo it, though, since that will lead to a worse experience for us humans.
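To make "more paths" measurable, you can treat your internal links as a graph and count how many pages link to each page and how many clicks it sits from the homepage. This is a rough sketch, not a crawler: the `links` dictionary, its URLs, and both function names are made up for illustration, and in practice you would fill the dictionary from a crawl export.

```python
from collections import deque


def inbound_link_counts(links: dict[str, list[str]]) -> dict[str, int]:
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from the start page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each key is a page, each value the pages it links to.
links = {
    "/": ["/guide", "/blog"],
    "/guide": ["/guide/seo", "/guide/email"],
    "/guide/seo": ["/guide"],
    "/guide/email": ["/guide", "/guide/email/ab-testing"],
    "/guide/email/ab-testing": ["/guide/email"],
    "/blog": ["/guide"],
    "/orphan": ["/"],
}

inbound = inbound_link_counts(links)
depths = click_depths(links)
# Pages missing from `depths` cannot be reached from the homepage at all.
unreachable = sorted(set(links) - set(depths))
print(inbound["/guide"], depths["/guide/email/ab-testing"], unreachable)
```

Pages with a low inbound count or a high click depth are the first candidates for extra breadcrumbs or "Related" links.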

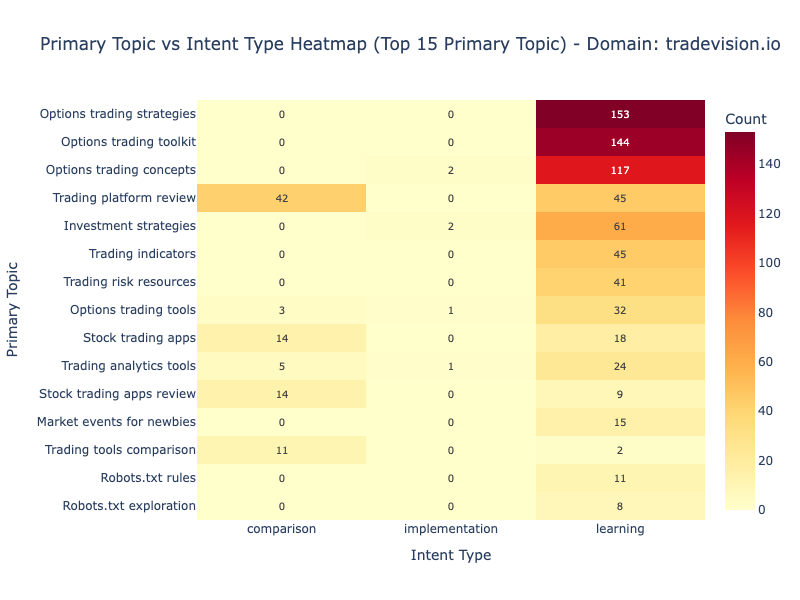

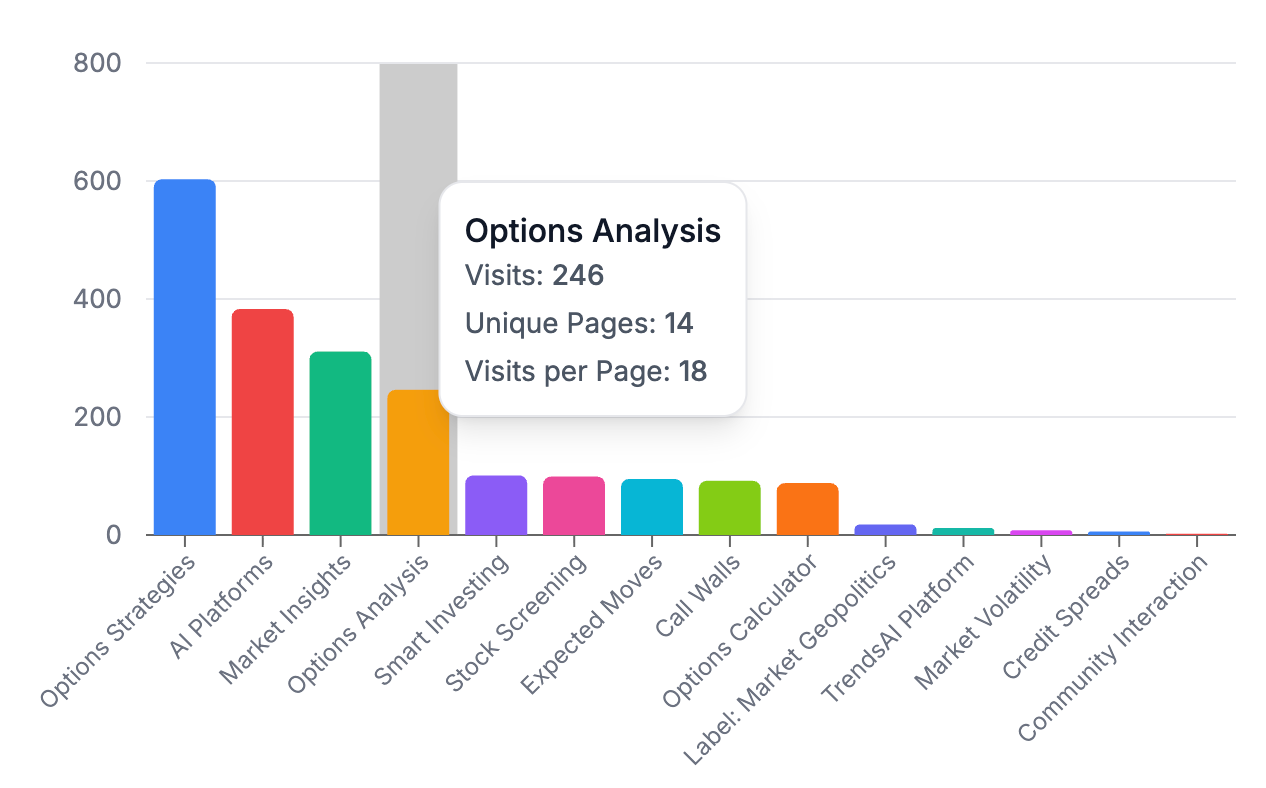

If you’re unsure which pages are most important on your website, examine server logs to see which pages are crawled the most by AI bots and focus your attention there.
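As a starting point for that log analysis, the sketch below counts AI bot requests per page. It rests on two assumptions: your server writes the common "combined" access log format, and matching a handful of user-agent tokens is good enough for a first pass. The token list is illustrative and incomplete, and `ai_bot_hits_per_page` is a hypothetical helper name.

```python
import re
from collections import Counter

# Illustrative, incomplete list of user-agent tokens used by AI crawlers.
AI_BOT_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot")

# Assumes the "combined" log format: request line, status, size, referer, user agent.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def ai_bot_hits_per_page(log_lines):
    """Return a Counter mapping request path to number of AI bot requests."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # Skip lines that do not look like combined-format entries.
        agent = match.group("agent").lower()
        if any(token.lower() in agent for token in AI_BOT_TOKENS):
            hits[match.group("path")] += 1
    return hits


sample_log = [
    '203.0.113.5 - - [12/Aug/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '203.0.113.9 - - [12/Aug/2025:10:00:05 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.7 - - [12/Aug/2025:10:01:00 +0000] "GET /blog HTTP/1.1" 200 901 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/126.0"',
]

for path, count in ai_bot_hits_per_page(sample_log).most_common():
    print(path, count)
```

User agents can be spoofed, so treat these counts as an estimate rather than verified bot traffic.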

At GPTrends, we’ve also developed a tool to help you determine which pages and content types to create and update.

3. Sitemap and Robots.txt: The crawler communication hub

A well-structured sitemap prevents dead links and ensures your most important content gets indexed properly. Here are our three steps for creating a well-organized crawler communication hub.

Step 1: Categorize Content by Update Frequency

When building your sitemap, assign the

Step 2: Build a Relevant Sitemap

Your sitemap should follow the Google specification and clearly signal the essentials: the page URL (

A good sitemap only includes live pages, so remove broken links right away. For sites with dynamic content, automate updates so crawlers always see the latest version without manual work.
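For dynamic sites, automating the sitemap can be as simple as regenerating it from your page inventory on every deploy. The following is a minimal sketch under stated assumptions: the `pages` list, its `status` field, and the `build_sitemap` name are hypothetical, while `loc`, `lastmod`, and `changefreq` are tags from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from page records, keeping only live (HTTP 200) pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        # Assumption: each record carries the last known HTTP status of the page.
        if page.get("status", 200) != 200:
            continue
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        # Optional tags are only written when the record provides them.
        for tag in ("lastmod", "changefreq"):
            if tag in page:
                ET.SubElement(url, tag).text = page[tag]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical page inventory, e.g. exported from a CMS.
pages = [
    {"loc": "https://example.com/", "lastmod": "2025-08-01", "changefreq": "daily"},
    {"loc": "https://example.com/old-page", "status": 404},
    {"loc": "https://example.com/blog/ai-crawlers", "lastmod": "2025-07-15"},
]

print(build_sitemap(pages))
```

The 404 page is dropped from the output, which keeps broken links out of the sitemap without manual cleanup.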

Step 3: Optimize Robots.txt

Your robots.txt file should guide crawlers to the right content while keeping them away from unnecessary or duplicate pages. Always include a reference to your sitemap so it’s easy to discover. Block admin areas or search/filter pages, but ensure that important sections, such as your blog, remain accessible. A clean robots.txt setup not only helps crawlers find your sitemap faster but also ensures they spend time indexing the content that actually matters. This is what a good robots.txt should look like:
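As a rough illustration of those rules (not a definitive template), the sketch below embeds a sample robots.txt and checks it with Python's standard `urllib.robotparser`. The paths, the `example.com` domain, and the `load_rules` helper are assumptions; adapt them to your own site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block admin and search pages, keep the blog open,
# and point crawlers at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Allow: /blog/

Sitemap: https://example.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Check what a crawler identifying as GPTBot would be allowed to fetch.
print(rules.can_fetch("GPTBot", "https://example.com/blog/ai-crawlers"))  # True
print(rules.can_fetch("GPTBot", "https://example.com/admin/login"))       # False
print(rules.site_maps())  # ['https://example.com/sitemap.xml']
```

Running a check like this before deploying a robots.txt change helps catch rules that accidentally block your blog or other key sections.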

4. LLMs.txt Specification: The New Standard for AI Communication

LLMs.txt is a proposed file format that communicates your site’s structure and content hierarchy to AI systems. While still just a proposal and not as impactful as robots.txt, llms.txt is an emerging standard for AI content discovery. It's very low effort to implement, and it's always better to be safe than sorry. Spend 15 minutes checking out llmstxt.org; it’s a high-ROI read.

The basic steps to implementing llms.txt can be found in our Notion guide.
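To give a feel for the format, here is a small sketch that assembles an llms.txt file following the layout described on llmstxt.org: an H1 title, a blockquote summary, and H2 sections containing link lists. The site name, URLs, and the `build_llms_txt` helper are invented for illustration, and this is not the procedure from the Notion guide.

```python
def build_llms_txt(site_name, summary, sections):
    """Assemble llms.txt content.

    `sections` maps a section heading to a list of (title, url, note) tuples;
    `note` may be an empty string.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            entry = f"- [{title}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    # End the file with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical site content.
llms_txt = build_llms_txt(
    "Example Co",
    "Example Co sells project management software for small teams.",
    {
        "Docs": [
            ("Pricing", "https://example.com/pricing", "Plans and billing"),
            ("Getting started", "https://example.com/docs/start", ""),
        ],
    },
)
print(llms_txt)
```

The result is served as plain text at `/llms.txt` in the site root.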

Tip: Combining llms.txt with a well-structured sitemap and internal linking ensures both humans and AI can navigate your site effortlessly.

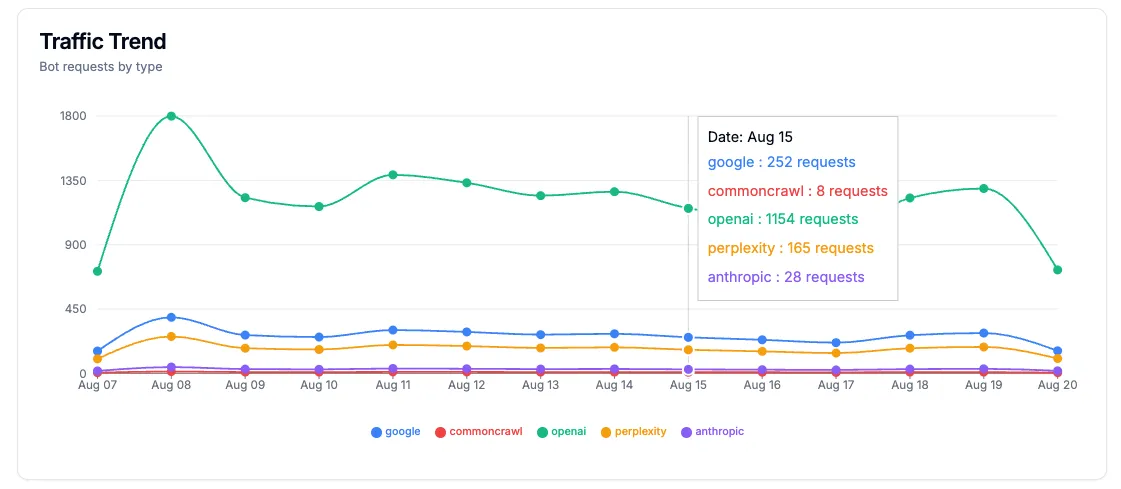

5. AI crawler analytics 📊

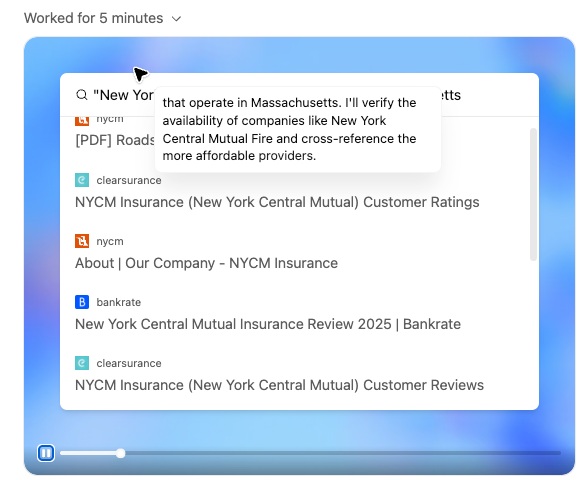

AI crawlers behave differently from human visitors, so traditional tracking methods won’t work. Here’s how to monitor their activity effectively.

Bot requests by crawler in GPTrends

- Forget JavaScript Tracking. AI crawlers don’t render JavaScript, so tools like Google Analytics won’t capture their activity.

- What About Pixel Tracking? Pixels won’t help either. Most crawlers only process text; very few consider images. As of August 2025, your pixel will likely be ignored. We’ll update you once this changes.

- Go Server-Side. To reliably track AI crawler traffic, server-side tracking is the way to go. Analyze request headers and log visits before sending the page.
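The server-side approach can be sketched as a small WSGI middleware that inspects the `User-Agent` header and records the visit before the page is served. This is an illustrative example under assumptions, not the GPTrends snippet: the token list is incomplete, and `AIBotLogger`, `detect_ai_bot`, and the `record` callback are hypothetical names.

```python
# Illustrative, incomplete list of user-agent tokens used by AI crawlers.
AI_BOT_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot")


def detect_ai_bot(user_agent: str):
    """Return the matching bot token, or None for other traffic."""
    lowered = user_agent.lower()
    for token in AI_BOT_TOKENS:
        if token.lower() in lowered:
            return token
    return None


class AIBotLogger:
    """WSGI middleware that records AI crawler visits, then serves the page."""

    def __init__(self, app, record):
        self.app = app
        self.record = record  # Callback, e.g. a function that writes to a log or queue.

    def __call__(self, environ, start_response):
        bot = detect_ai_bot(environ.get("HTTP_USER_AGENT", ""))
        if bot is not None:
            self.record({"bot": bot, "path": environ.get("PATH_INFO", "/")})
        # The wrapped application still handles the request unchanged.
        return self.app(environ, start_response)


def demo_app(environ, start_response):
    """Stand-in for your real application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


visits = []
wrapped = AIBotLogger(demo_app, visits.append)
wrapped(
    {"HTTP_USER_AGENT": "Mozilla/5.0 (compatible; ClaudeBot/1.0)", "PATH_INFO": "/blog"},
    lambda status, headers: None,
)
print(visits)  # [{'bot': 'ClaudeBot', 'path': '/blog'}]
```

Because the check happens on the server, it works even though the crawler never runs JavaScript or loads a tracking pixel.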

🤖 At GPTrends, we handle bot tracking on your site with an easy-to-install code snippet. Find more info about Bot Analytics here or reach out to us to explore your integration options.

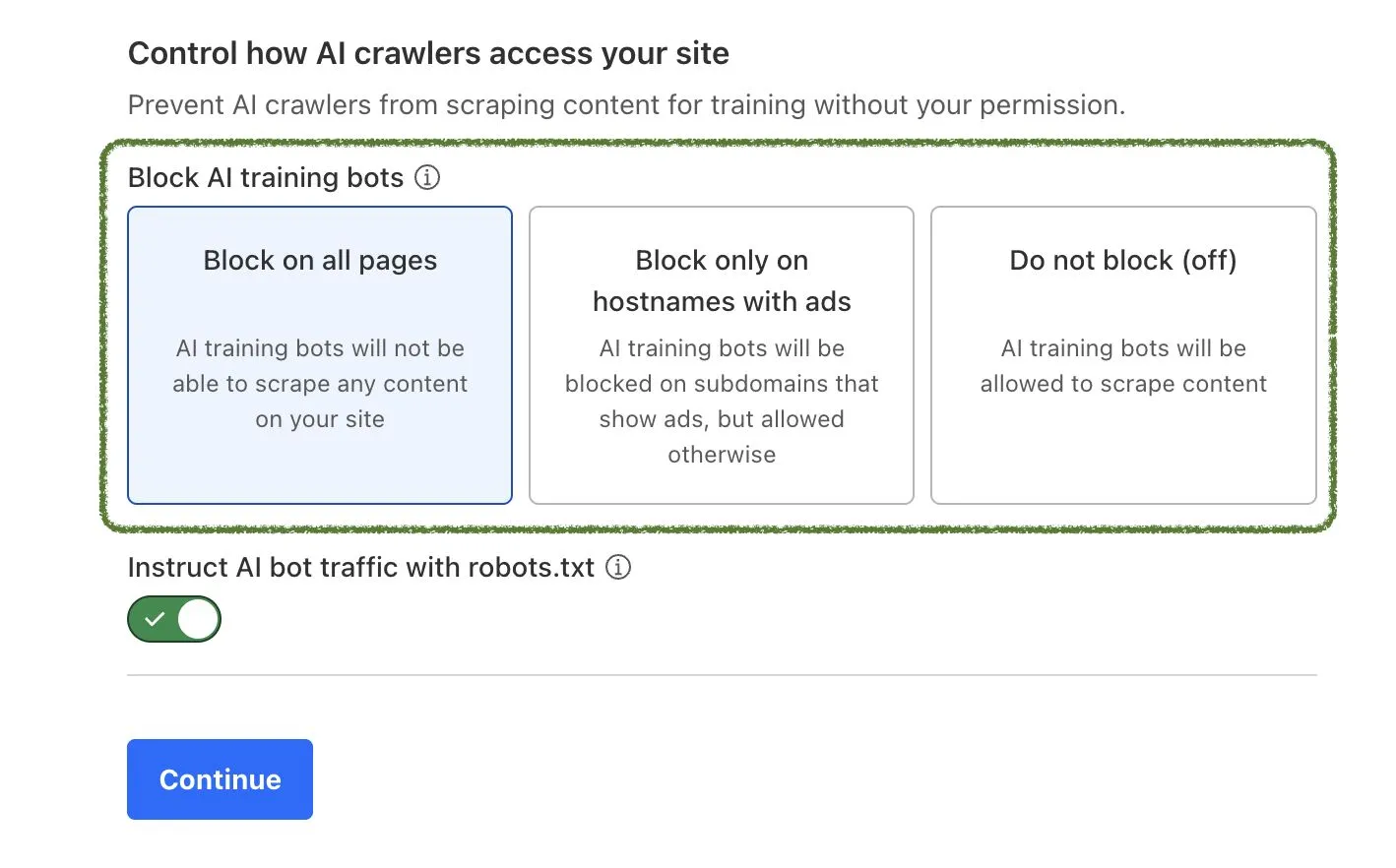

6. Cloudflare’s fight against AI training bots

Cloudflare recently started blocking AI training bots from crawling its customers’ content by default. Since roughly 20% of the web sits behind Cloudflare, this is a significant shift.

Publishers may welcome the change, as it protects their intellectual property from being used without permission. On the other hand, many companies want AI bots to access their content, aiming for higher visibility in AI-powered recommendations. Some might even consider paying for it.

Cloudflare AI crawler access settings

If you’re a Cloudflare customer, check your bot-blocking settings to ensure your content is discoverable by AI apps. This is a rapidly evolving area, so stay informed by following us on LinkedIn, as we share any updates on such matters there as well.

Final note

This is what we consider Step 0 of GEO (Generative Engine Optimization): making sure AI systems can find and understand your site in the first place. Do this right, and your content becomes easier to find, properly indexed, and far more likely to be surfaced in AI recommendations, putting you ahead of competitors who haven’t adapted yet. Share the full Notion guide with your technical SEO person.

At GPTrends, we go beyond giving you actionable insights. Our strength lies in the technical implementation that ensures you’re not just optimized, but future-proof.

If you want to put these recommendations into practice, GPTrends can help you run the audits and tracking automatically. Try it free for 7 days here.
